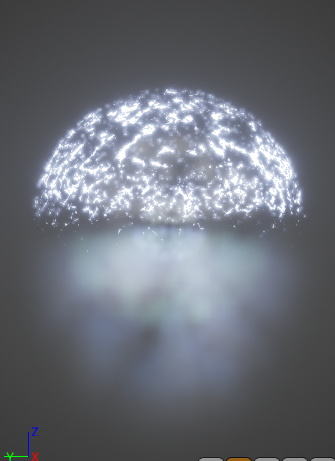

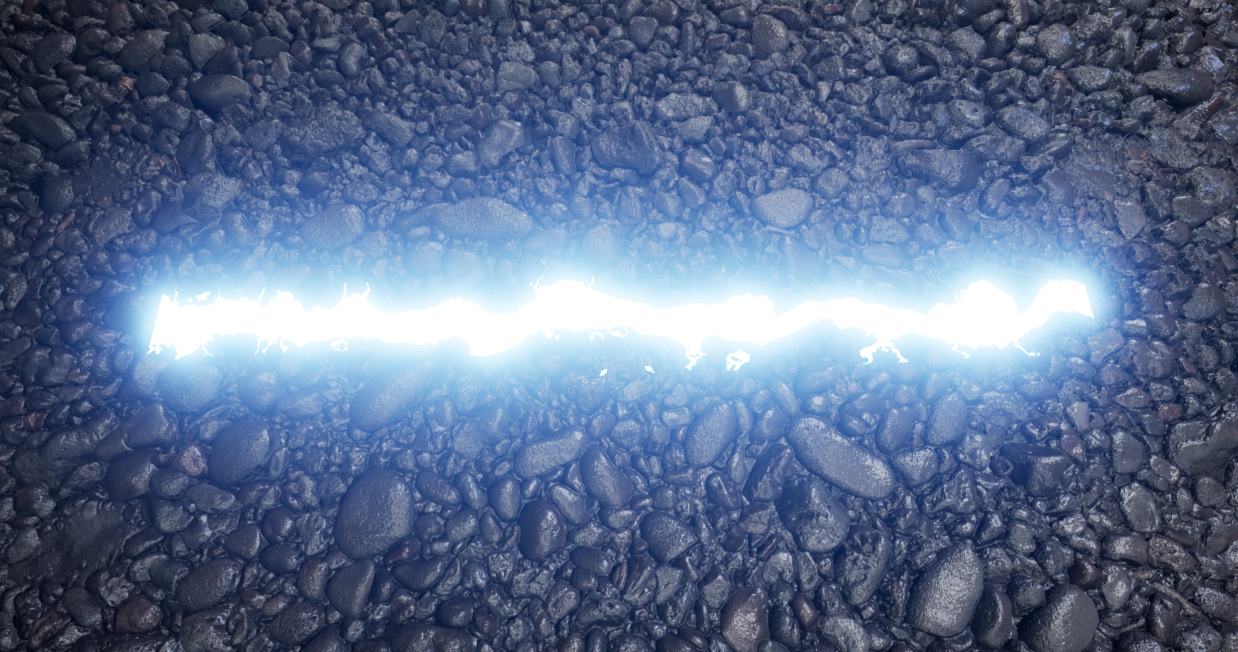

So I've been tinkering away at this project for some months now. I came across some images of the neural structure of a cochlea and thought it would be a good opportunity to delve into UE4's (still experimental) particle system, Niagara. It has since grown into a way to explore various methods for constructing the cochlea in its entirety, with emulating confocal microscopy being one of the ideas. The other is merging it with a system that logically transforms soundwaves into the visual output, while staying procedural enough that a user can interface with it.

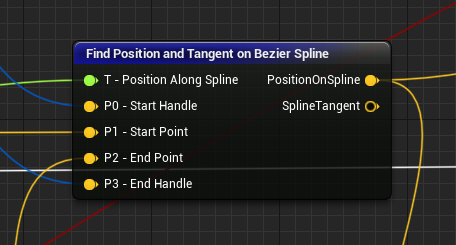

To start, the primary simulation type is CPU-based, because it drives the mesh renderer and the ribbon renderer, and mesh particles can't be enabled with GPU simulation. So there's a lot of logic going on to generate the transforms for the neurons. Primarily, I construct a control spline via functions I set up in Blueprint, then access that spline in Niagara to work with the pertinent vectors.
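The control spline itself lives in Blueprint, but the per-particle math is easy to sketch outside the engine. Below is a minimal Python sketch, assuming a uniform Catmull-Rom curve as a stand-in for the Blueprint spline (UE4's spline component uses its own curve representation), that samples a position and tangent on one segment and builds an orthonormal frame a neuron mesh could be oriented to. The function names and the world-up fallback are hypothetical, not part of the original setup.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _combine(weights: Sequence[float], points: Sequence[Vec3]) -> Vec3:
    """Weighted sum of the four control points, component by component."""
    return tuple(sum(w * p[k] for w, p in zip(weights, points)) for k in range(3))


def _normalize(v: Vec3) -> Vec3:
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def catmull_rom(pts: Sequence[Vec3], t: float) -> Tuple[Vec3, Vec3]:
    """Position and unnormalized tangent on the segment pts[1] -> pts[2], t in [0, 1]."""
    t2, t3 = t * t, t * t * t
    # Uniform Catmull-Rom basis weights for pts[0..3].
    pos_w = [0.5 * (-t3 + 2 * t2 - t),
             0.5 * (3 * t3 - 5 * t2 + 2),
             0.5 * (-3 * t3 + 4 * t2 + t),
             0.5 * (t3 - t2)]
    # Derivatives of the weights above with respect to t.
    tan_w = [0.5 * (-3 * t2 + 4 * t - 1),
             0.5 * (9 * t2 - 10 * t),
             0.5 * (-9 * t2 + 8 * t + 1),
             0.5 * (3 * t2 - 2 * t)]
    return _combine(pos_w, pts), _combine(tan_w, pts)


def neuron_frame(pts: Sequence[Vec3], t: float) -> Tuple[Vec3, Vec3, Vec3, Vec3]:
    """Hypothetical helper: (position, forward, right, up) for a particle at t."""
    pos, tangent = catmull_rom(pts, t)
    forward = _normalize(tangent)
    world_up = (0.0, 0.0, 1.0)
    if abs(sum(f * u for f, u in zip(forward, world_up))) > 0.999:
        world_up = (1.0, 0.0, 0.0)  # avoid a degenerate cross product
    right = _normalize(_cross(forward, world_up))
    up = _cross(right, forward)  # unit length, since right and forward are orthonormal
    return pos, forward, right, up
```

Sampling t from 0 to 1 across each segment and feeding the resulting position and axes into the particle transform is the general idea; how the actual Blueprint functions expose these vectors to Niagara isn't shown here.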

On Somata & Optimization

One of the more immediate problems to solve is finding the most effective way to represent the shapes without exceeding the rendering budget. For the neuron cell bodies I started off with a pre-tessellated static mesh that roughly fit within the limit, but it still took at least 100 triangles to get a decent amount of curvature, and multiplied by the number of particles/instances, that adds up. Why not mesh LODs? You'll immediately find that the most common method for LODing (level of detail), i.e. swapping in reduced-polycount versions of the original, doesn't actually function for mesh particles. In the current implementation LOD 0 is the only level that gets rendered, which makes LODs a no-go for managing triangle counts. So there are a number of other options to consider.
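To put numbers on why "LOD 0 only" hurts, here is a small Python sketch of the triangle arithmetic. The LOD triangle counts, distance thresholds and instance distances are made-up illustration values, not measurements from this project, and engines usually pick LODs by screen size rather than raw distance; distance is used here only to keep the sketch short.

```python
from typing import Sequence


def total_triangles(distances: Sequence[float],
                    lod_triangles: Sequence[int],
                    lod_distances: Sequence[float],
                    lod0_only: bool) -> int:
    """Sum the triangles drawn for a set of mesh-particle instances.

    lod_triangles[i] is the triangle count of LOD i. An instance uses LOD i when
    its distance is below lod_distances[i]; the last LOD catches everything else.
    With lod0_only=True every instance is charged the LOD-0 count, which is the
    behaviour described above for mesh particles.
    """
    total = 0
    for d in distances:
        if lod0_only:
            total += lod_triangles[0]
            continue
        lod = len(lod_triangles) - 1
        for i, limit in enumerate(lod_distances):
            if d < limit:
                lod = i
                break
        total += lod_triangles[lod]
    return total
```

For example, 10,000 cell-body instances at 100 triangles each already come to 1,000,000 triangles, regardless of how far away most of them are.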

I thought: hey, why not just tessellate? The first requirement is starting geometry with a low triangle count that I can also morph into a sphere. I opted for an octahedron: 8 triangles, not bad. (A tetrahedron should also work, but its silhouette would deviate further from a sphere at the lowest tessellation level.) I enabled tessellation, piped in a scalar and checked wireframe. Funnily enough, wireframe mode doesn't render the tessellated triangles when the static mesh is in Niagara particle space, whereas Cascade mesh particles are fine. We get to use either flat tessellation or PN triangles (a spline-based method that smooths the surface). PN triangles didn't seem to work with mesh particles, and even if they did, they wouldn't give me the displacement controls I wanted.
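The geometric idea behind tessellating an octahedron into a sphere can be checked on the CPU. This minimal Python sketch, which is not UE4 code, splits each of the octahedron's 8 faces into n² flat sub-triangles and then pushes every vertex out to the sphere's radius. The uniform split is an idealization: the GPU tessellator's actual pattern and triangle count differ, so 8·n² is only a rough figure.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Tri = Tuple[Vec3, Vec3, Vec3]


def octahedron() -> List[Tri]:
    """The 8 faces of a unit octahedron, wound counter-clockwise seen from outside."""
    px, nx = (1.0, 0.0, 0.0), (-1.0, 0.0, 0.0)
    py, ny = (0.0, 1.0, 0.0), (0.0, -1.0, 0.0)
    pz, nz = (0.0, 0.0, 1.0), (0.0, 0.0, -1.0)
    return [(px, py, pz), (py, nx, pz), (nx, ny, pz), (ny, px, pz),
            (py, px, nz), (nx, py, nz), (ny, nx, nz), (px, ny, nz)]


def tessellate_flat(tri: Tri, n: int) -> List[Tri]:
    """Uniformly split one triangle into n * n flat sub-triangles (same winding)."""
    a, b, c = tri

    def point(i: int, j: int) -> Vec3:
        u, v = i / n, j / n
        return tuple(a[k] + (b[k] - a[k]) * u + (c[k] - a[k]) * v for k in range(3))

    out: List[Tri] = []
    for i in range(n):
        for j in range(n - i):
            out.append((point(i, j), point(i + 1, j), point(i, j + 1)))
            if i + j < n - 1:  # the "downward" triangle sharing this grid cell
                out.append((point(i + 1, j), point(i + 1, j + 1), point(i, j + 1)))
    return out


def spherify(p: Vec3, radius: float) -> Vec3:
    """Push a point out along its direction from the centre to the given radius."""
    length = math.sqrt(sum(c * c for c in p))
    return tuple(radius * c / length for c in p)


def octa_sphere(n: int, radius: float = 1.0) -> List[Tri]:
    """Flat-tessellate every octahedron face, then project all vertices onto the sphere."""
    return [tuple(spherify(p, radius) for p in sub)
            for face in octahedron()
            for sub in tessellate_flat(face, n)]
```

At n = 1 this is the bare 8-triangle octahedron; at n = 4 the idealized count is 128 triangles.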

So: flat tessellation, then some shader math. Multiply the vertex normals by 0.5, retrieve the vector length, take the cosine of that, add n * x, and multiply the result by the vertex normals. Then multiply by the local scale. For instances you can normally retrieve that scale by appending the lengths of the X, Y and Z axis vectors after transforming them from local space into world space. However, the local-to-world transform function is only supported in the vertex, compute or pixel shader. Since we're working in the domain/hull shader, my workaround is to pass the transformed vector through from the vertex shader via CustomizedUVs. It's simple and effective in a variety of cases. That said, if you're dealing with vec3/float3 values you'll need two CustomizedUV channels, since you unpack via a texcoord, which is a vec2.
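Here is a CPU-side Python sketch of the three pieces of math in that paragraph. It is not material or HLSL code, and it assumes one reading of the displacement, (cos(|0.5·N|) + n·x)·N scaled per axis by the local scale, where n and x are the scalar inputs mentioned above whose exact meaning isn't spelled out. The scale helper assumes a 3×3 local-to-world matrix acting on column vectors, and the packing helpers only illustrate how a float3 fits across two float2 channels; all function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]


def length(v: Sequence[float]) -> float:
    return math.sqrt(sum(c * c for c in v))


def spherify_offset(normal: Vec3, n: float, x: float, local_scale: Vec3) -> Vec3:
    """One reading of the displacement: (cos(|0.5 * N|) + n * x) * N, times the local scale.

    'n' and 'x' stand in for the unnamed scalar inputs from the text (an assumption).
    """
    k = math.cos(length(tuple(0.5 * c for c in normal))) + n * x
    return tuple(k * c * s for c, s in zip(normal, local_scale))


def object_scale(local_to_world: Sequence[Sequence[float]]) -> Vec3:
    """Per-axis scale = length of each local axis after the local-to-world transform.

    local_to_world is a 3x3 row-major matrix acting on column vectors, so the
    transformed local axis i is simply column i of the matrix.
    """
    return tuple(length([local_to_world[r][i] for r in range(3)]) for i in range(3))


def pack_float3(v: Vec3) -> Tuple[Vec2, Vec2]:
    """Split a float3 across two float2 texcoord channels; the last slot is unused."""
    return (v[0], v[1]), (v[2], 0.0)


def unpack_float3(ch0: Vec2, ch1: Vec2) -> Vec3:
    """Reassemble the float3 from the two float2 channels."""
    return (ch0[0], ch0[1], ch1[0])
```

For a matrix that rotates 90° about Z and scales by (2, 3, 4), `object_scale` recovers (2, 3, 4), because rotation doesn't change the length of the transformed axes.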